| Model | Size | Debug | Translation | Switch | Polish (Mean OptScore) | Win Rate |
|---|---|---|---|---|---|---|
| Zero-shot | | | | | | |
| gpt-4-0613 | - | 0.316 | 0.465 | 0.264 | 1.12% | 0.855 |
| OpenCodeInterpreter-DS-33B | 33B | 0.236 | 0.368 | 0.141 | 6.02% | 0.776 |
| gemini-ultra | - | 0.304 | 0.378 | 0.041 | 5.31% | 0.750 |
| deepseek-coder-33B-instruct | 33B | 0.275 | 0.410 | 0.162 | 1.10% | 0.737 |
| gemini-pro | - | 0.286 | 0.344 | 0.076 | 5.86% | 0.737 |
| gpt-3.5-turbo-1106 | - | 0.290 | 0.475 | 0.177 | 0.09% | 0.724 |
| OpenCodeInterpreter-DS-6.7B | 6.7B | 0.233 | 0.357 | 0.126 | 4.45% | 0.671 |
| WizardCoder-33B-V1.1 | 33B | 0.274 | 0.371 | 0.156 | 0.79% | 0.632 |
| glm-4 | - | 0.220 | 0.278 | 0.085 | 5.17% | 0.526 |
| Magicoder-S-DS-6.7B | 6.7B | 0.242 | 0.343 | 0.130 | 0.21% | 0.513 |
| Phind-CodeLlama-34B-v2 | 34B | 0.230 | 0.279 | 0.074 | 2.84% | 0.500 |
| octocoder | 15.5B | 0.042 | 0.392 | 0.030 | 1.39% | 0.434 |
| CodeLlama-13B-Instruct-hf | 13B | 0.176 | 0.333 | 0.021 | 2.31% | 0.421 |
| CodeLlama-34B-hf | 34B | 0.163 | 0.310 | 0.052 | 1.10% | 0.382 |
| Magicoder-S-CL-7B | 7B | 0.174 | 0.272 | 0.039 | 1.31% | 0.329 |
| WizardCoder-15B-V1.0 | 15B | 0.159 | 0.309 | 0.067 | 0.91% | 0.329 |
| CodeLlama-7B-Instruct-hf | 7B | 0.155 | 0.289 | 0.017 | 1.47% | 0.289 |
| CodeLlama-34B-Instruct-hf | 34B | 0.131 | 0.287 | 0.027 | 1.02% | 0.211 |
| CodeFuse-CodeLlama-34B | 34B | 0.166 | 0.218 | 0.028 | 0.33% | 0.184 |
| Three-shot | | | | | | |
| gemini-ultra | - | 0.286 | 0.443 | 0.152 | 5.62% | 0.855 |
| gpt-4-0613 | - | 0.345 | 0.517 | 0.303 | 1.13% | 0.816 |
| OpenCodeInterpreter-DS-6.7B | 6.7B | 0.233 | 0.372 | 0.165 | 6.47% | 0.770 |
| OpenCodeInterpreter-DS-33B | 33B | 0.230 | 0.371 | 0.229 | 5.75% | 0.763 |
| deepseek-coder-33B-instruct | 33B | 0.272 | 0.417 | 0.235 | 1.18% | 0.737 |
| gpt-3.5-turbo-1106 | - | 0.270 | 0.364 | 0.201 | 1.54% | 0.684 |
| gemini-pro | - | 0.229 | 0.392 | 0.139 | 5.23% | 0.671 |
| WizardCoder-33B-V1.1 | 33B | 0.279 | 0.362 | 0.243 | 0.65% | 0.645 |
| Magicoder-S-DS-6.7B | 6.7B | 0.262 | 0.321 | 0.192 | 1.44% | 0.605 |
| glm-4 | - | 0.233 | 0.299 | 0.100 | 5.30% | 0.572 |
| CodeLlama-34B-hf | 34B | 0.133 | 0.307 | 0.113 | 1.75% | 0.474 |
| Phind-CodeLlama-34B-v2 | 34B | 0.239 | 0.275 | 0.092 | 1.20% | 0.421 |
| CodeLlama-13B-Instruct-hf | 13B | 0.160 | 0.327 | 0.028 | 1.75% | 0.414 |
| Magicoder-S-CL-7B | 7B | 0.157 | 0.245 | 0.075 | 1.70% | 0.329 |
| WizardCoder-15B-V1.0 | 15B | 0.114 | 0.271 | 0.099 | 1.65% | 0.322 |
| CodeFuse-CodeLlama-34B | 34B | 0.166 | 0.240 | 0.050 | 1.61% | 0.289 |
| octocoder | 15.5B | 0.050 | 0.290 | 0.054 | 1.09% | 0.211 |
| CodeLlama-7B-Instruct-hf | 7B | 0.167 | 0.271 | 0.028 | 1.00% | 0.211 |
| CodeLlama-34B-Instruct-hf | 34B | 0.143 | 0.303 | 0.032 | 0.32% | 0.211 |

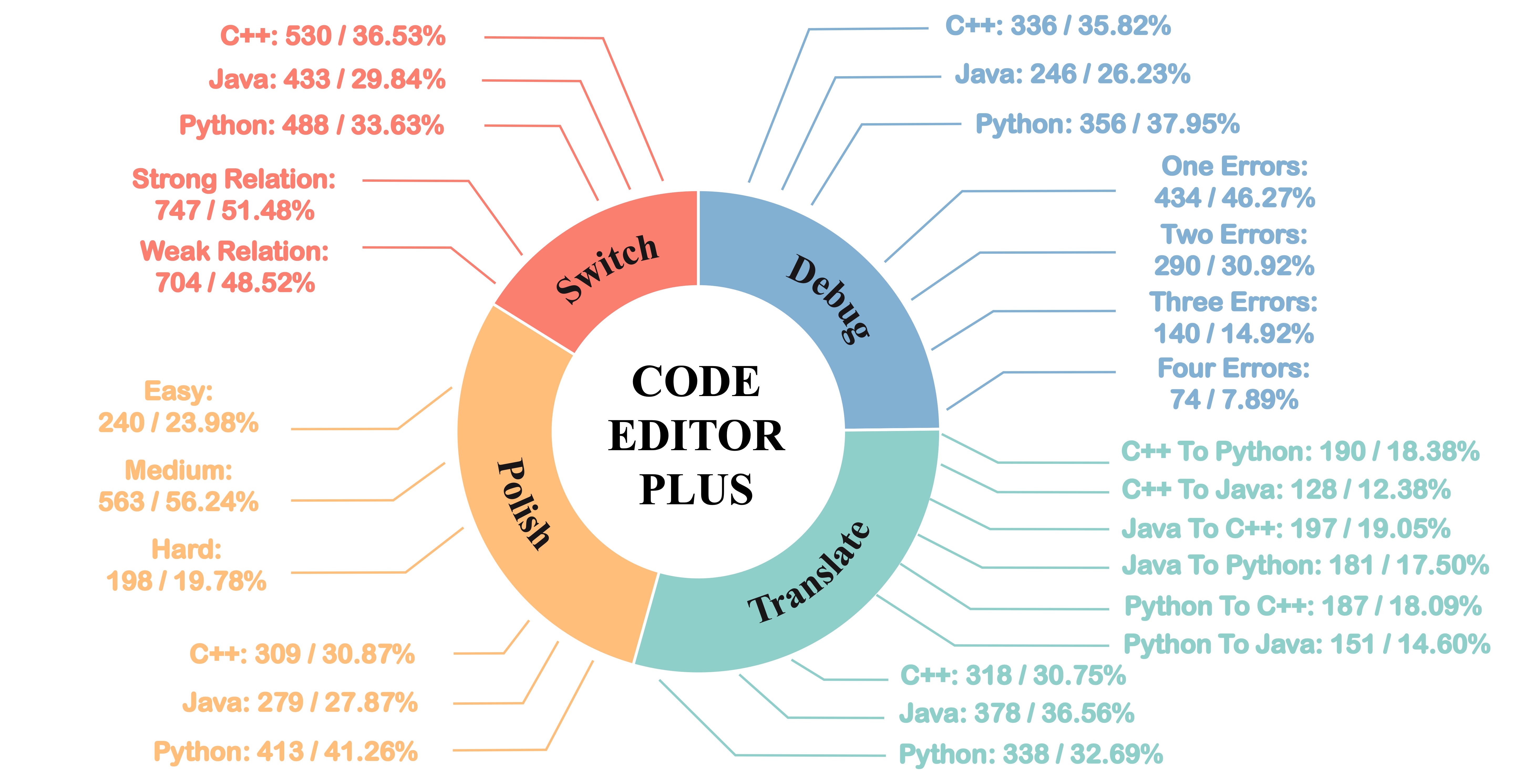

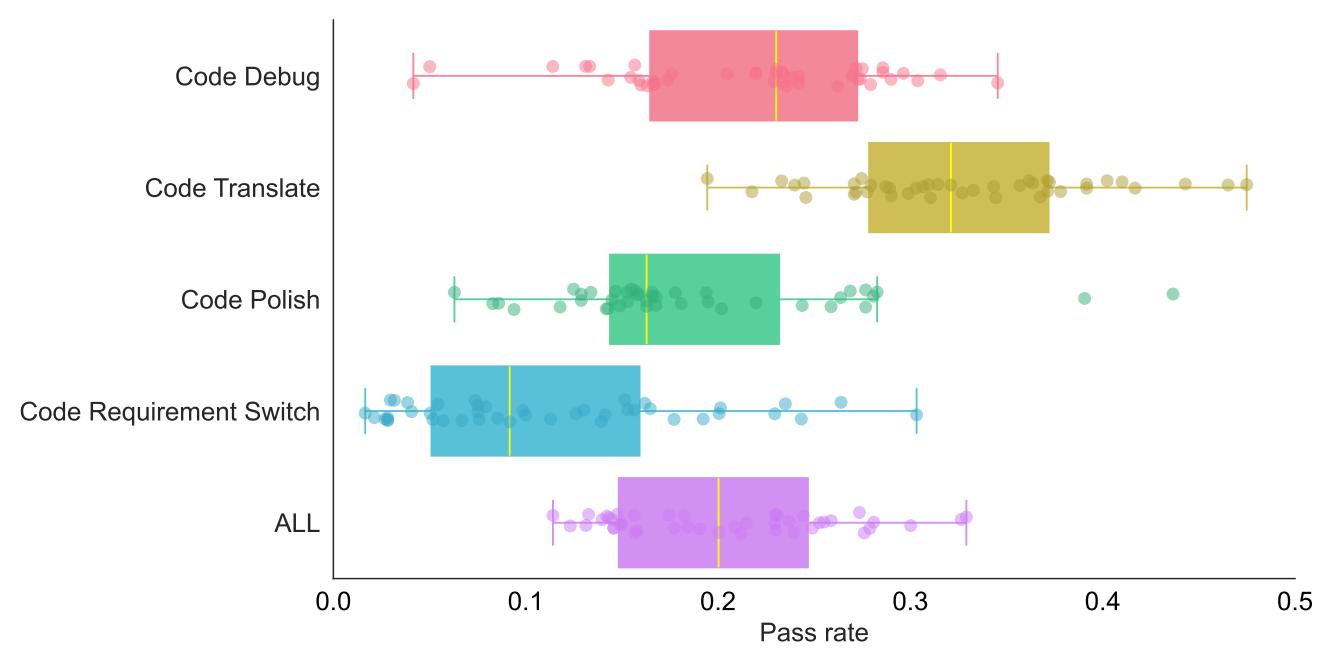

Table 1: Evaluation of LLMs on CodeEditorBench. All results are generated with greedy decoding. Code Debug, Code Translate, and Code Requirement Switch are evaluated with pass@1, while Code Polish is evaluated with Mean OptScore.
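
To make the pass@1 figures concrete, the following is a minimal sketch of how pass@1 can be computed under greedy decoding, where each problem receives exactly one generated solution. With a single sample per problem, the general pass@k estimator reduces to the fraction of problems whose solution passes all tests. The function name `pass_at_1_greedy` and the sample outcomes are hypothetical and are not taken from the benchmark's evaluation code.

```python
from typing import Sequence


def pass_at_1_greedy(passed: Sequence[bool]) -> float:
    """Return the fraction of problems whose single greedy sample passed.

    `passed[i]` is True when the one solution generated for problem i
    passed every test case. This helper is an illustrative assumption,
    not the benchmark's official scorer.
    """
    if not passed:
        raise ValueError("need at least one problem outcome")
    return sum(passed) / len(passed)


# Hypothetical outcomes for five problems (illustrative, not from Table 1).
outcomes = [True, False, False, True, False]
print(f"pass@1 = {pass_at_1_greedy(outcomes):.3f}")
```

Because greedy decoding is deterministic for a fixed model and prompt, no averaging over multiple samples is involved in this sketch.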